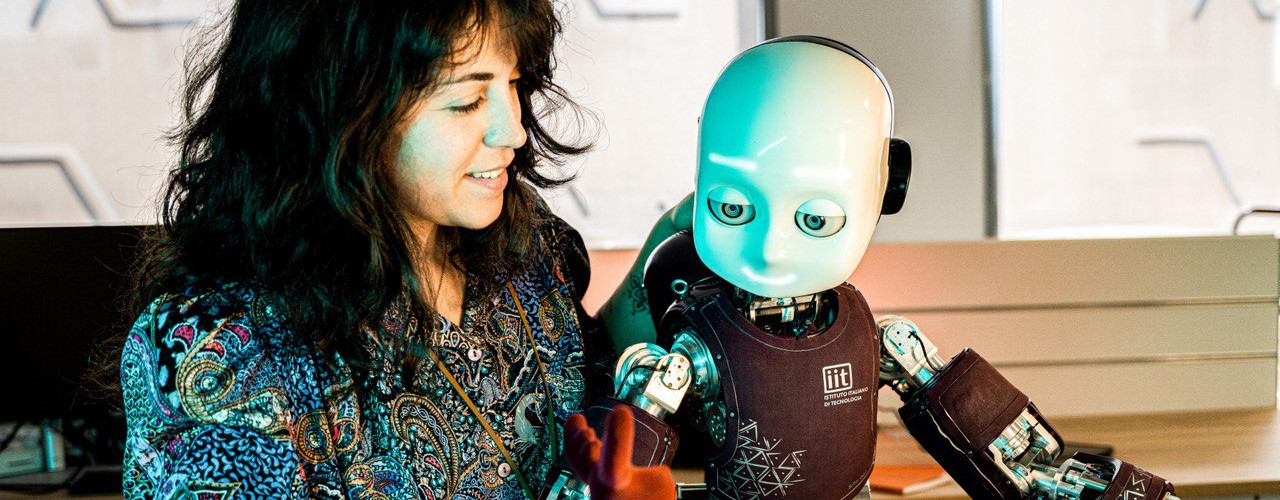

Visual attention is essential for humans and animals interacting with their environment. Robots can similarly exploit attentional mechanisms to autonomously select and react to external stimuli. We are developing an attention system for iCub that will direct its gaze to regions of the field of view containing potentially interesting stimuli, such as objects that are moving or within reach. To do so, we take inspiration from models of primate visual attention and adapt them to the event-driven paradigm. We are also working on their full implementation on spiking neuromorphic hardware, towards a low-latency, low-cost attentive module.

We developed a bio-inspired, bottom-up, event-driven saliency model that follows the “Gestalt laws” to detect potential objects (proto-objects) in the scene. The Gestalt laws describe how humans perceptually group the elements of a scene in order to understand and interact with the environment. We adapted the original bio-inspired model, designed for frame-based cameras, to work with event-driven cameras. Our proto-object saliency pipeline is fully bio-inspired and runs online on iCub.
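As a rough illustration of the event-driven adaptation, the sketch below accumulates recent events into a per-pixel count map and scores each pixel by how much event activity lies on a ring around it, a crude stand-in for the Gestalt closure cue (a location enclosed by edges is likely to belong to an object). It is a simplified sketch under stated assumptions, not the pipeline running on iCub: the full model includes further stages (such as oriented-edge and border-ownership processing) that are omitted here, and the names `Event`, `accumulate`, `ring_kernel`, `grouping_map`, `saliency_map` and `most_salient`, as well as the time window and ring radii, are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Event:
    x: int    # pixel column
    y: int    # pixel row
    t: float  # timestamp in seconds
    p: int    # polarity (+1 ON, -1 OFF); ignored by this sketch


def accumulate(events, width, height, t_now, window):
    """Count, per pixel, the events that occurred within the last `window` seconds."""
    frame = [[0.0] * width for _ in range(height)]
    for e in events:
        if t_now - window <= e.t <= t_now:
            frame[e.y][e.x] += 1.0
    return frame


def ring_kernel(radius, thickness=1.0):
    """Normalised annulus: equal weights on pixels about `radius` away from the centre."""
    reach = int(math.ceil(radius + thickness / 2))
    cells = [(dx, dy) for dy in range(-reach, reach + 1)
             for dx in range(-reach, reach + 1)
             if abs(math.hypot(dx, dy) - radius) <= thickness / 2]
    return [(dx, dy, 1.0 / len(cells)) for dx, dy in cells]


def grouping_map(frame, radius):
    """Pool event counts over a ring around every pixel (hypothetical closure cue)."""
    height, width = len(frame), len(frame[0])
    kernel = ring_kernel(radius)
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            out[y][x] = sum(w * frame[y + dy][x + dx] for dx, dy, w in kernel
                            if 0 <= y + dy < height and 0 <= x + dx < width)
    return out


def saliency_map(frame, radii=(2, 4, 8)):
    """Sum grouping responses over several ring sizes, one per assumed object scale."""
    maps = [grouping_map(frame, r) for r in radii]
    return [[sum(m[y][x] for m in maps) for x in range(len(frame[0]))]
            for y in range(len(frame))]


def most_salient(sal):
    """Return the (x, y) location of the saliency maximum, i.e. the next gaze target."""
    _, x, y = max((v, x, y) for y, row in enumerate(sal) for x, v in enumerate(row))
    return x, y
```

Summing over several radii is only a cheap substitute for proper multi-scale processing; with a single radius, a ring of events of that radius produces its strongest response exactly at the ring's centre.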

We added depth information using a bio-inspired Disparity Extractor based on cooperative networks for event-driven cameras. The model runs online on the robot with low latency (~100 ms) and prioritizes objects closer to iCub.
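Cooperative stereo matching goes back to Marr and Poggio: cells tuned to a (position, disparity) pair excite neighbours at the same disparity (continuity constraint) and inhibit rivals on the same line of sight (uniqueness constraint), so that consistent matches reinforce each other while false matches die out. The sketch below applies this idea to a single scanline, where each eye is a binary vector marking pixels that emitted an event within a short time window. It is an illustration under assumptions, not the iCub Disparity Extractor: the function names, the binary update rule, the choice to let only cells backed by coincident events become active, and the values of `radius`, `eps` and `max_iter` are all hypothetical.

```python
def match_map(left, right, max_disp):
    """Initial matches C0[x][d]: left pixel x and right pixel x - d both fired."""
    width = len(left)
    return [[1 if left[x] and 0 <= x - d < width and right[x - d] else 0
             for d in range(max_disp + 1)]
            for x in range(width)]


def cooperative_step(c, c0, radius, eps):
    """One synchronous update of the (hypothetical) binary cooperative network."""
    width, ndisp = len(c), len(c[0])
    new = [[0] * ndisp for _ in range(width)]
    for x in range(width):
        for d in range(ndisp):
            if not c0[x][d]:
                continue  # only cells backed by coincident events may be active
            # Continuity: excitation from neighbours at the same disparity.
            support = sum(c[x + dx][d] for dx in range(-radius, radius + 1)
                          if dx != 0 and 0 <= x + dx < width)
            # Uniqueness: inhibition from rivals on the left line of sight (same x)...
            rivals = sum(c[x][k] for k in range(ndisp) if k != d)
            # ...and on the right line of sight (cells sharing right pixel x - d).
            rivals += sum(c[x - d + k][k] for k in range(ndisp)
                          if k != d and 0 <= x - d + k < width)
            new[x][d] = 1 if support - eps * rivals >= 0 else 0
    return new


def cooperative_disparity(left, right, max_disp, radius=2, eps=0.4, max_iter=10):
    """Iterate to a fixed point (or max_iter) and read out one disparity per pixel."""
    c0 = match_map(left, right, max_disp)
    c = [row[:] for row in c0]
    for _ in range(max_iter):
        nxt = cooperative_step(c, c0, radius, eps)
        if nxt == c:
            break  # fixed point reached
        c = nxt
    disparity = {}
    for x, row in enumerate(c):
        active = [d for d, v in enumerate(row) if v]
        if active:
            disparity[x] = max(active)  # tie-break toward the nearer (larger-disparity) match
    return disparity
```

For example, with left events at pixels 2, 3, 5, 8, 9 and the same pattern shifted by two pixels in the right eye, the initial map contains five false matches besides the true ones, and a single update leaves only disparity 2. Because disparity grows as objects get closer, a downstream attention stage could, for instance, scale each proto-object's saliency by its disparity to favour nearby objects; that weighting is an assumption here, not the documented iCub rule.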

The spiking implementation of the proto-object saliency model, running on the SpiNNaker neuromorphic platform, has a latency of only ~17 ms.
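The page does not say which neuron model the SpiNNaker implementation uses. As a generic illustration of what a spiking stage computes, the sketch below simulates one discrete-time leaky integrate-and-fire (LIF) neuron driven by input events: each input spike raises the membrane potential, the potential leaks back towards rest, and the neuron fires when it crosses threshold. The function name `simulate_lif` and all parameter values (time constant, threshold, refractory period, weights) are hypothetical; this is not the SpiNNaker or sPyNNaker code.

```python
from collections import Counter


def simulate_lif(input_steps, n_steps, weight, tau_m=20.0, dt=1.0,
                 v_rest=0.0, v_thresh=1.0, v_reset=0.0, t_ref=2.0):
    """Discrete-time leaky integrate-and-fire neuron with instantaneous synapses.

    input_steps: time-step indices at which input spikes (events) arrive;
    a repeated index means several coincident spikes. Times are in ms.
    Returns the list of time steps at which the neuron fires.
    """
    spikes_in = Counter(input_steps)
    v = v_rest
    refractory = 0  # remaining refractory steps
    spikes_out = []
    for step in range(n_steps):
        if refractory > 0:
            refractory -= 1
            continue  # membrane held at reset; inputs arriving now are dropped
        v += (dt / tau_m) * (v_rest - v)      # leak towards rest (Euler step)
        v += weight * spikes_in.get(step, 0)  # each input spike jumps v by `weight`
        if v >= v_thresh:
            spikes_out.append(step)
            v = v_reset
            refractory = int(round(t_ref / dt))
    return spikes_out
```

With `weight=0.6`, two input spikes one step apart make the neuron fire on the second one, while the same two spikes 50 steps apart do not, because the first contribution has leaked away. This sensitivity to temporally coincident events is what lets spiking stages respond as soon as enough evidence arrives, rather than waiting for a full frame.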

Bio-inspired bottom-up proto-object saliency models, cooperative stereo matching, spiking neural networks.

EVENT-DRIVEN PROTO-OBJECT SALIENCY MODELS

E. Niebur - Johns Hopkins University

EVENT-DRIVEN BIO-INSPIRED ATTENTIVE SYSTEM FOR THE ICUB HUMANOID ROBOT ON SPINNAKER

A. Perrett, S. Furber - Advanced Processor Technologies Research Group, The University of Manchester

PROTO-OBJECT BASED SALIENCY FOR EVENT-DRIVEN CAMERAS IN THREE-DIMENSIONAL SPACE

S. Ghosh - Technische Universität Berlin

LOIHI NEUROMORPHIC HARDWARE

Y. Sandamirskaya - Intel Labs

D'Angelo G., Janotte E., Schoepe T., O'Keeffe J., Milde M.B., Chicca E., Bartolozzi C. Event-Based Eccentric Motion Detection Exploiting Time Difference Encoding. Frontiers in Neuroscience.

Iacono M., D'Angelo G., Glover A., Tikhanoff V., Niebur E., Bartolozzi C. Proto-object based saliency for event-driven cameras. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS).